A Conversation with One of My 8090 Co-Founders

I talked to Sina Sojoodi (X/LinkedIn), one of my co-founders at 8090, after we published our Deep Dive on AI last week. Let me know if this is interesting to you.

Chamath: There’s this great story of how OpenAI accidentally discovered grokking in LLMs. Walk me through what happened there.

Sina: LLM Grokking – this is funny. It's almost like how penicillin was discovered.

Typically, in traditional machine learning, there's a point of overfitting where you stop training, because the model is just memorizing the training set rather than learning to generalize.

But OpenAI accidentally ran their experiment for days instead of hours, well beyond this overfitting point. And they reached what they call the 'grokking zone', where somehow, even though the training should have been completed many times over, the model started to actually understand concepts and show emergent behaviors beyond memorization.
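To make the contrast concrete, here is a minimal sketch of conventional patience-based early stopping, assuming we track a validation loss per epoch. The function name `early_stopping_epoch`, the `patience` parameter, and the loss values are all hypothetical, made up for illustration rather than taken from any real OpenAI run. The numbers are shaped so that the loss rises for a while (what looks like overfitting) and then falls again much later.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the epoch at which patience-based early stopping would halt, or None."""
    best = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            # New best validation loss: remember it and keep training.
            best = loss
            best_epoch = epoch
        elif epoch - best_epoch >= patience:
            # No improvement for `patience` epochs: conventional wisdom says stop.
            return epoch
    return None


# Made-up validation losses, for illustration only (not data from a real run).
val_losses = [1.00, 0.80, 0.70, 0.75, 0.85, 0.90, 0.95, 0.95, 0.90, 0.60, 0.30, 0.10]

stop_epoch = early_stopping_epoch(val_losses, patience=3)
best_epoch_overall = min(range(len(val_losses)), key=val_losses.__getitem__)

print("early stopping would halt at epoch:", stop_epoch)
print("lowest loss actually occurs at epoch:", best_epoch_overall)
```

On this toy curve, the early-stopping rule halts long before the late drop in loss, which is the kind of improvement a run left going "by accident" would get to see.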

This discovery completely changed how we approach training transformer models. Instead of following traditional machine learning wisdom to avoid overfitting, we now know that transformers can actually benefit from training far beyond conventional limits. And the scaling laws from related work give us empirical formulas for the resources needed – parameters, compute, and data – to reach these emergent capabilities.
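As one example of how parameters, compute, and data relate, a widely used rule of thumb for transformers estimates training compute as roughly 6 floating-point operations per parameter per training token. The sketch below applies that approximation; it is not a formula stated in this conversation, and the model size and token count are hypothetical values chosen only for illustration.

```python
def approx_training_flops(n_params, n_tokens):
    """Estimate training compute with the common C ~ 6 * N * D rule of thumb."""
    # Roughly 6 floating-point operations per parameter per training token
    # (forward plus backward pass). This is an approximation, not an exact count.
    return 6 * n_params * n_tokens


# Hypothetical model and dataset sizes, for illustration only.
flops = approx_training_flops(n_params=7e9, n_tokens=1e12)
print(f"approximate training FLOPs: {flops:.2e}")
```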

They discovered this phenomenon basically by accident. And that's the story of deep learning, to some degree. It's more about trial and error and trying a bunch of stuff than theory, although having a mental model for how it works does help.

Chamath: Recently, I decided to use the word "brain" instead of saying "AI". What are your thoughts on that analogy?

Sina: The first thing that comes to mind is the incredible complexity of neurons in the human brain. Each biological neuron is like its own universe of computation. It's doing these incredibly complex operations, it has its own learning mechanisms, and it maintains its own state. When we talk about the brain having 100 trillion connections between biological neurons, in some sense, that number doesn't even capture the full power of a human brain because each biological synapse is its own sophisticated processor.

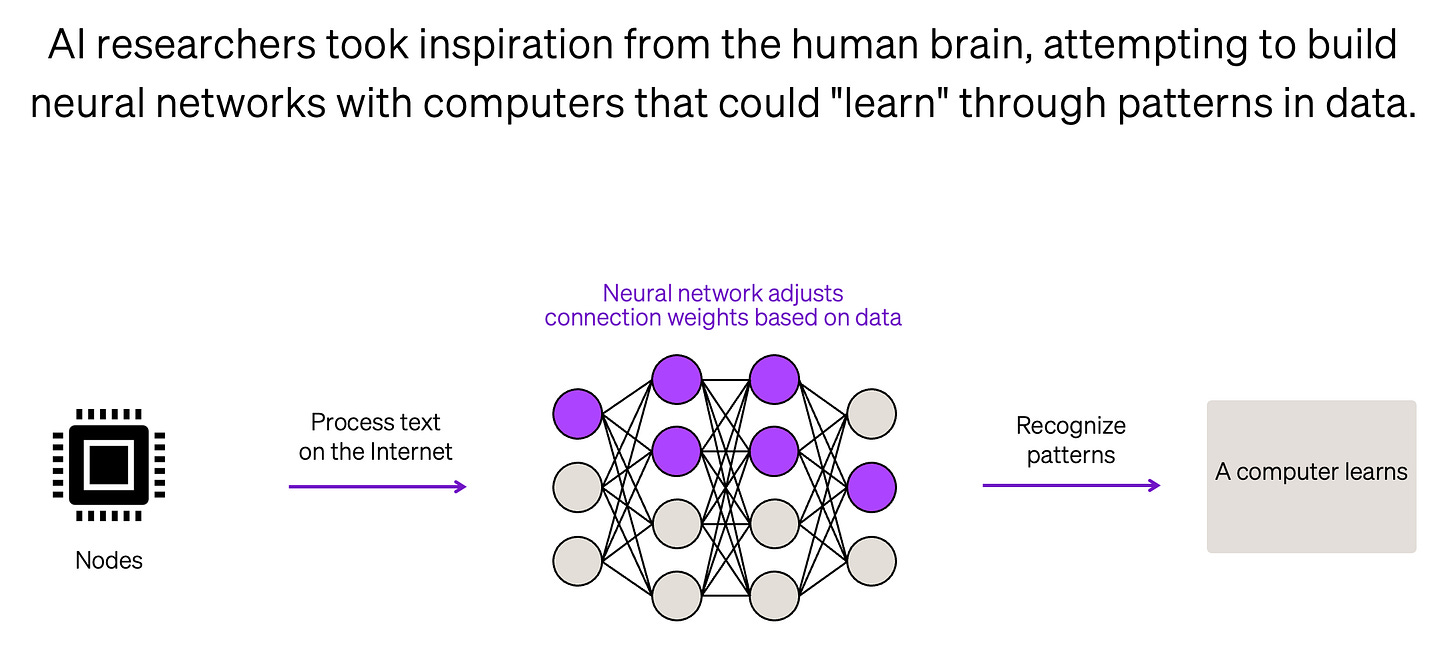

Artificial neural networks took inspiration from this but went in a different direction. We simplified our artificial neurons down to basic weights and biases – basically y = Wx + b. While artificial neural networks might have billions of artificial neurons, each one is just doing these elementary mathematical operations: multiply inputs by weights, add a bias, run through an activation function.
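The "multiply inputs by weights, add a bias, run through an activation function" recipe can be sketched in a few lines of plain Python. This is a minimal illustration, assuming a small fully connected layer with a ReLU activation; the names `dense_layer` and `relu` and the example numbers are hypothetical, not from any particular framework.

```python
def relu(z):
    """A common activation function: pass positive values, clamp negatives to zero."""
    return max(0.0, z)


def dense_layer(W, x, b, activation):
    """Compute activation(Wx + b), where W is a list of weight rows."""
    outputs = []
    for row, bias in zip(W, b):
        # Each artificial neuron: weighted sum of the inputs, plus a bias.
        z = sum(w * xi for w, xi in zip(row, x)) + bias
        outputs.append(activation(z))
    return outputs


# Illustrative values only: two neurons, each with two inputs.
W = [[0.5, -1.0],
     [2.0, 1.0]]
x = [1.0, 2.0]
b = [0.0, -1.0]

y = dense_layer(W, x, b, relu)
print(y)
```

Real networks stack many such layers and run them as large matrix multiplications on GPUs, but each unit is still doing this same elementary arithmetic.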

The trade-offs between these approaches are stark. Biological brains excel at power efficiency but operate on millisecond timescales, while artificial neurons running on H100s can process information billions of times faster but consume massive amounts of energy to do so.

The learning mechanisms are fundamentally different too. Nature spent a billion years evolving these incredibly sophisticated biological systems, while we're trying to accelerate that evolution with artificial neurons through massive computational parallelism and gradient descent. Both approaches work, just in fundamentally different ways: biological evolution through natural selection over eons versus algorithmic optimization over weeks of training.

It reminds me of the whole birds and airplanes parallel. Both achieve flight, but through completely different engineering approaches.